Hex CRC Calculator

Understanding Hexadecimal CRC Calculation

A hex CRC calculator is an essential tool for data integrity verification in computing systems, particularly when working with hexadecimal data formats. CRC (Cyclic Redundancy Check) algorithms generate checksums by performing polynomial division on input data, creating a mathematical fingerprint that detects accidental changes such as transmission errors and storage corruption. A CRC is not designed to catch deliberate tampering, because anyone who alters the data can recompute a matching checksum.

The hexadecimal number system, using base-16 digits (0-9, A-F), provides an efficient way to represent binary data in a compact, human-readable format. When combined with CRC algorithms, hex representation becomes invaluable for network protocols, embedded systems, and firmware validation. Understanding how to calculate CRC values from hex input requires mastering both hexadecimal mathematics and polynomial division principles.

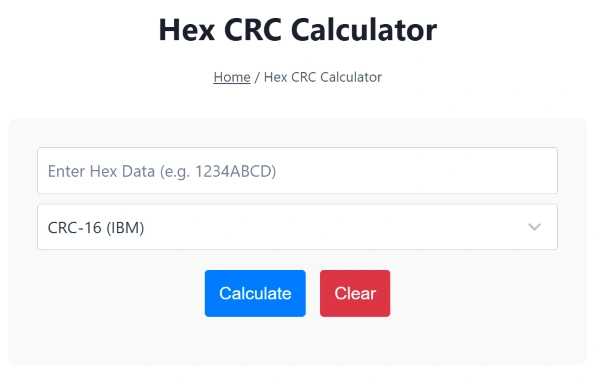

The Hex CRC Calculator image is shown below:

Core CRC Algorithm Implementation for Hex Data

CRC calculation begins with treating input data as a large binary number, which is then divided by a predetermined generator polynomial. The division is carried out modulo 2, so every subtraction step is a bitwise XOR with no carries or borrows. The remainder from this division becomes the CRC checksum. For hexadecimal input, each hex digit represents exactly four binary bits, making conversion straightforward. Our online hex calculator can help verify polynomial coefficients and perform the necessary arithmetic operations during manual calculations.
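The division described above can be sketched in a few lines. This is a minimal illustration, assuming an 8-bit CRC with generator 0x07, a zero initial value, and no bit reflection; the function name `crc8_bitwise` is made up for this example and is not taken from any particular calculator.

```python
def crc8_bitwise(data: bytes, poly: int = 0x07) -> int:
    """Remainder of the message (shifted left by 8 bits) divided by the generator, modulo 2."""
    crc = 0
    for byte in data:
        crc ^= byte                          # bring the next 8 message bits into the register
        for _ in range(8):
            if crc & 0x80:                   # top bit set: "subtract" the generator with XOR
                crc = ((crc << 1) ^ poly) & 0xFF
            else:                            # top bit clear: just shift
                crc = (crc << 1) & 0xFF
    return crc


payload = bytes.fromhex("deadbeef")          # each hex digit contributes four bits
print(f"CRC-8 of deadbeef: {crc8_bitwise(payload):02X}")
print(f"CRC-8 of '123456789': {crc8_bitwise(b'123456789'):02X}")  # F4, the usual check value
```

With these particular parameters, appending the checksum byte to the message and running the function again gives a remainder of zero, which is how a receiver can verify a frame in one pass.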

The most commonly used CRC standards include CRC-8, CRC-16, and CRC-32, which produce 8-, 16-, and 32-bit checksums respectively; a wider checksum leaves fewer errors undetected. CRC-16/MODBUS, for instance, uses the polynomial 0x8005 and is widely implemented in industrial communication protocols. Meanwhile, CRC-32/IEEE 802.3 employs the polynomial 0x04C11DB7 for Ethernet frame validation.
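As a quick way to compare the two standards named above, the sketch below computes both over the conventional test string "123456789". The function name `crc16_modbus` is illustrative. Both algorithms process bits least-significant first, so the MODBUS loop uses 0xA001, which is 0x8005 with its bits reversed. CRC-32 comes from Python's standard `zlib` module, which implements the IEEE 802.3 variant.

```python
import zlib


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: polynomial 0x8005 (reflected form 0xA001), initial value 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


check = b"123456789"
print(f"CRC-16/MODBUS: {crc16_modbus(check):04X}")   # 4B37
print(f"CRC-32:        {zlib.crc32(check):08X}")     # CBF43926
```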

Hex Input Processing and Format Handling

Professional hex CRC calculators must accommodate various input formats. Raw hex strings like “deadbeef” represent the most basic format, while space-separated bytes (“de ad be ef”) improve readability for longer data sequences. Network protocols often use colon-separated format (“de:ad:be:ef”), particularly for MAC addresses and hardware identifiers.

Input validation becomes crucial when processing hex data. Characters outside 0-9 and A-F must be rejected, an odd number of digits should be flagged because it cannot form whole bytes, and upper- and lowercase digits should be treated as equivalent. Many systems require specific byte ordering (little-endian vs big-endian), affecting how hex strings are interpreted during CRC calculation. When working with text-based data, our ASCII to hex converter can prepare input for CRC processing.
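The input handling described in this section might look like the following sketch. The helper name `parse_hex` and its error messages are assumptions made for illustration; it accepts only the three formats mentioned above (raw, space-separated, and colon-separated) in either letter case.

```python
import string
import zlib


def parse_hex(text: str) -> bytes:
    """Normalize raw, space-separated, or colon-separated hex into bytes."""
    cleaned = "".join(ch for ch in text if ch not in " \t\r\n:")
    bad = sorted(set(cleaned) - set(string.hexdigits))
    if bad:
        raise ValueError(f"invalid hex characters: {bad}")
    if len(cleaned) % 2:
        raise ValueError("odd number of hex digits; each byte needs two")
    return bytes.fromhex(cleaned)            # accepts upper- and lowercase digits


data = parse_hex("DE:AD:be:ef")
print(data.hex())                            # deadbeef

# Byte order matters: reversing the bytes gives a different checksum.
print(f"{zlib.crc32(data):08X}")
print(f"{zlib.crc32(data[::-1]):08X}")
```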

Practical Applications in Network and Embedded Systems

Network protocols extensively use CRCs for packet validation. Every Ethernet frame ends with a CRC-32 frame check sequence computed over the frame's bytes, which analysis tools usually display in hex, so that corruption on a link is detected. TCP and UDP also carry checksums, but these use the 16-bit ones' complement Internet checksum rather than a CRC, and they cover the payload as well as the header.

Embedded systems rely heavily on CRCs for firmware integrity checking. Intel HEX files, containing program code and data, protect each record with a one-byte two's-complement checksum rather than a CRC, so a CRC over the whole firmware image is commonly stored or transmitted alongside the file. Bootloaders verify these checks before executing uploaded code, preventing system crashes from corrupted data. For binary analysis of firmware, our hex to binary converter provides essential bit-level visualization.
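For reference, the check byte at the end of each Intel HEX record is a two's-complement sum of the record's bytes, which is a simpler scheme than a CRC. The sketch below verifies one record and then computes a CRC-32 over its data bytes, roughly as a bootloader might do over a whole image. The function names are illustrative, and the whole-image CRC step is an assumption about a typical bootloader rather than part of the Intel HEX format.

```python
import zlib


def verify_ihex_record(line: str) -> bool:
    """True if length, address, type, data, and check byte sum to 0 modulo 256."""
    if not line.startswith(":"):
        raise ValueError("Intel HEX records start with ':'")
    raw = bytes.fromhex(line[1:].strip())
    return (sum(raw) & 0xFF) == 0


def ihex_record_data(line: str) -> bytes:
    """Extract the data bytes: skip length (1), address (2), type (1); drop the check byte."""
    raw = bytes.fromhex(line[1:].strip())
    return raw[4:4 + raw[0]]


record = ":10010000214601360121470136007EFE09D2190140"
print(verify_ihex_record(record))                       # True
print(f"{zlib.crc32(ihex_record_data(record)):08X}")    # CRC-32 over the 16 data bytes
```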

Hex-encoded IP addresses are another common input. Network administrators who store or exchange addresses in hex form can run a CRC over them to catch accidental corruption, for example in an exported routing table. Our specialized IP to hex converter and hex to IP converter streamline this workflow, enabling seamless conversion between formats during network analysis.
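A small sketch of the IP-to-hex round trip, using Python's standard `ipaddress` module. The helper names `ip_to_hex` and `hex_to_ip` are invented for this example, and it handles IPv4 only.

```python
import ipaddress
import zlib


def ip_to_hex(address: str) -> str:
    """Dotted-quad IPv4 address to its 8-digit hex form."""
    return ipaddress.IPv4Address(address).packed.hex()


def hex_to_ip(hex_text: str) -> str:
    """8-digit hex form back to a dotted-quad IPv4 address."""
    return str(ipaddress.IPv4Address(bytes.fromhex(hex_text)))


hex_form = ip_to_hex("192.168.0.1")
print(hex_form)                                        # c0a80001
print(hex_to_ip(hex_form))                             # 192.168.0.1
print(f"{zlib.crc32(bytes.fromhex(hex_form)):08X}")    # CRC-32 over the four address bytes
```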

Advanced CRC Configuration and Customization

Beyond standard CRC implementations, custom polynomial configuration allows specialized error detection tailored to specific applications. Polynomial selection affects detection capability, with certain polynomials optimizing for burst error detection while others excel at random error identification.

Initial seed values and final XOR masks provide additional customization options. These parameters modify the basic CRC algorithm, producing distinct checksums for proprietary protocols, although they add no cryptographic security. The reflection options control whether each input byte is processed least-significant bit first and whether the final value is bit-reversed, accommodating different hardware architectures and communication standards.
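To show how these parameters interact, here is a sketch of a configurable bitwise CRC. The parameter names (`width`, `poly`, `init`, `refin`, `refout`, `xorout`) follow a naming convention commonly used in CRC catalogues and are assumptions of this example, not the calculator's actual option names. The sketch assumes a width of at least 8 bits.

```python
def reflect(value: int, width: int) -> int:
    """Reverse the lowest `width` bits of value."""
    out = 0
    for _ in range(width):
        out = (out << 1) | (value & 1)
        value >>= 1
    return out


def crc_generic(data: bytes, width: int, poly: int, init: int,
                refin: bool, refout: bool, xorout: int) -> int:
    """Bitwise CRC with configurable seed, reflection, and final XOR (width >= 8)."""
    mask = (1 << width) - 1
    top = 1 << (width - 1)
    crc = init
    for byte in data:
        if refin:                            # process this byte least-significant bit first
            byte = reflect(byte, 8)
        crc ^= byte << (width - 8)
        for _ in range(8):
            if crc & top:
                crc = ((crc << 1) ^ poly) & mask
            else:
                crc = (crc << 1) & mask
    if refout:                               # bit-reverse the final register
        crc = reflect(crc, width)
    return crc ^ xorout


check = b"123456789"
print(f"{crc_generic(check, 16, 0x8005, 0xFFFF, True, True, 0x0000):04X}")              # 4B37 (CRC-16/MODBUS)
print(f"{crc_generic(check, 16, 0x1021, 0xFFFF, False, False, 0x0000):04X}")            # 29B1 (CRC-16/CCITT-FALSE)
print(f"{crc_generic(check, 32, 0x04C11DB7, 0xFFFFFFFF, True, True, 0xFFFFFFFF):08X}")  # CBF43926 (CRC-32)
```

Changing only the seed or the final XOR mask gives a different checksum for the same data and polynomial, which is why both sides of a protocol must agree on all six parameters.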

Performance optimization becomes important for high-throughput applications. Lookup table generation trades memory for speed, pre-calculating the CRC contribution of each of the 256 possible byte values so that the inner loop handles a whole byte per step instead of a single bit. This approach dramatically accelerates CRC calculation for large data sets, making real-time validation feasible in demanding applications.
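The table-driven approach can be sketched as follows for CRC-32, with the result cross-checked against Python's built-in `zlib.crc32`. The names `make_crc32_table` and `crc32_table` are illustrative; 0xEDB88320 is the bit-reversed form of 0x04C11DB7.

```python
import zlib


def make_crc32_table() -> list:
    """Pre-compute the CRC-32 remainder for each of the 256 byte values."""
    table = []
    for byte in range(256):
        crc = byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xEDB88320
            else:
                crc >>= 1
        table.append(crc)
    return table


CRC32_TABLE = make_crc32_table()             # 256 entries of 32 bits each


def crc32_table(data: bytes) -> int:
    """CRC-32 using one table lookup per input byte."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc = (crc >> 8) ^ CRC32_TABLE[(crc ^ byte) & 0xFF]
    return crc ^ 0xFFFFFFFF


data = bytes.fromhex("deadbeef")
print(crc32_table(data) == zlib.crc32(data))         # True
print(f"{crc32_table(b'123456789'):08X}")            # CBF43926
```

The work is still proportional to the input length; the table only reduces the cost of each byte from eight shift-and-XOR steps to a single lookup.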

Integration with Data Encoding and Legacy Systems

Modern systems often handle multiple data encoding formats simultaneously. Base64-encoded data, common in web applications and email systems, requires conversion to hex before CRC calculation. Our Base64 to hex converter and hex to Base64 converter enable seamless workflow integration for encoded data validation.
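A sketch of that conversion path, using only Python's standard library: decode the Base64 text to raw bytes, view them as hex, and checksum the bytes. The function name `crc32_of_base64` is an assumption for this example.

```python
import base64
import zlib


def crc32_of_base64(encoded: str) -> tuple:
    """Decode Base64 text and return (hex string, CRC-32 of the decoded bytes)."""
    raw = base64.b64decode(encoded, validate=True)   # validate=True rejects stray characters
    return raw.hex(), zlib.crc32(raw)


hex_form, crc = crc32_of_base64("3q2+7w==")
print(hex_form)                                      # deadbeef
print(f"{crc:08X}")

# The CRC is computed over the decoded bytes, not over the Base64 text itself.
print(crc == zlib.crc32(b"3q2+7w=="))                # False
```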

Legacy systems frequently use octal representation alongside hexadecimal formats. Cross-platform compatibility demands supporting both formats, with transparent conversion between numbering systems. The octal to hex converter and hex to octal converter provide essential functionality for maintaining compatibility with older systems while implementing modern CRC validation.

FAQs About Hex CRC Calculator

Q1: Can calculators handle custom byte widths?

A1: Yes, advanced calculators can process customized byte widths for specialized applications, though most handle standard 8-bit bytes.

Q2: How does input size affect CRC speed?

A2: Calculation time grows linearly with data size in every implementation. A bitwise loop performs eight steps per byte, while a lookup table reduces that to one step per byte, so large inputs are processed several times faster.

Q3: Is CRC secure for authentication?

A3: CRC is intended for error detection, not security. Use cryptographic hash functions for authentication or tamper-proofing.

Q4: Can CRC be combined with other checksums?

A4: Yes. A CRC catches accidental corruption cheaply, while a cryptographic hash or MAC also guards against deliberate changes, so complex workflows often use both.

Q5: What happens with invalid hex input?

A5: Calculators will typically reject invalid or malformed hex data and prompt the user to correct the input so that the result is accurate.

Q6: Do calculators support batch processing?

A6: Some provide batch or API features for automating mass data validation across multiple files or data streams.